How Directors Must Balance Innovation and Risk in the Age of Artificial Intelligence

A Singapore Perspective on AI Governance

Introduction: The Board's AI Awakening

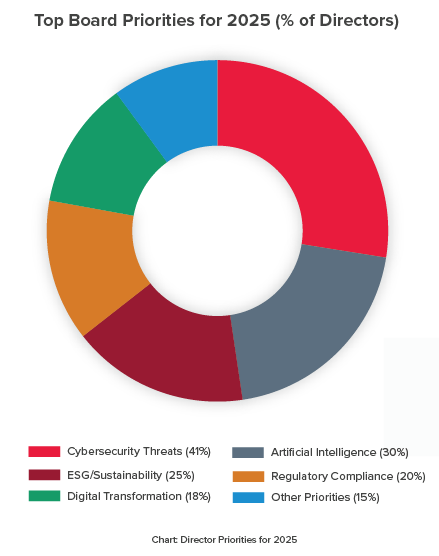

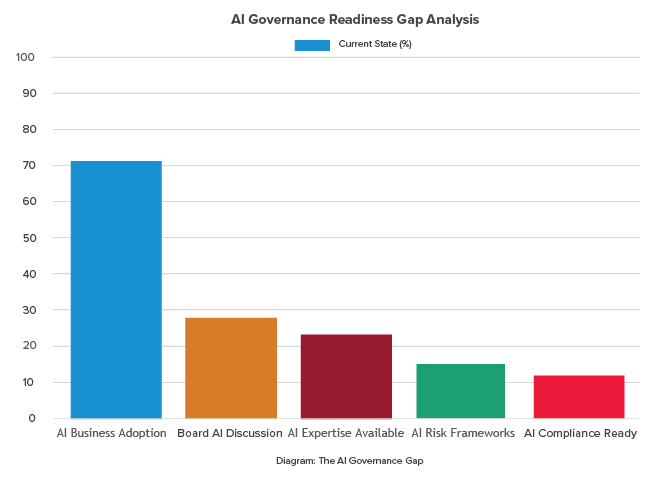

Corporate boardrooms across Singapore face an unprecedented challenge: governing technologies that evolve faster than traditional oversight mechanisms can adapt. Artificial intelligence, once confined to research laboratories, now drives strategic business decisions across our city-state's economy. McKinsey reported in early 2024 that 72% of the organisations it surveyed worldwide had adopted AI in at least one business function, and AI is projected to contribute 21% to U.S. GDP by 2030. Against this backdrop, Singapore's position as a regional technology hub makes effective AI governance particularly critical for our corporate boards.

The Singapore Institute of Directors (SID), in its continuing emphasis on board excellence and corporate governance, has increasingly highlighted AI governance as a crucial directorial responsibility. This focus aligns with Singapore's Smart Nation initiative and the national AI strategy, which positions the country as a leader in developing and deploying AI responsibly. For Singapore directors, this represents both an opportunity and an obligation: to lead organisations that capture AI's competitive advantages while managing its inherent risks within our unique regulatory and business environment.

The stakes are particularly high for Singapore. As a small, open economy dependent on innovation and international trust, our corporate boards must demonstrate world-class AI governance to maintain our competitive position. The Monetary Authority of Singapore (MAS) and the Infocomm Media Development Authority (IMDA) have already begun establishing AI governance frameworks, signalling that regulatory expectations will only intensify. For corporate directors, this reality demands a fundamental shift from reactive oversight to proactive AI governance that balances innovation with responsibility.

The Singapore Context: Where Innovation Meets Regulation

Singapore's AI Governance Landscape

Singapore has positioned itself at the forefront of responsible AI development in Asia. The IMDA's Model AI Governance Framework, first released in 2019 and updated in 2020, provides organisations with practical guidance on implementing responsible AI. This framework emphasises transparency, explainability, fairness, and human oversight—principles that should guide board-level AI governance discussions.

IMDA and the AI Verify Foundation have also issued the Model AI Governance Framework for Generative AI, recognising the unique challenges posed by large language models and generative systems. For Singapore boards, these frameworks are not merely compliance checklists but strategic tools for building stakeholder trust and competitive advantage.

MAS has been particularly active in the financial services sector. It published its Fairness, Ethics, Accountability and Transparency (FEAT) principles in 2018 and established the Veritas initiative to help financial institutions assess their use of AI and data analytics against those principles. Directors of financial institutions must be especially attuned to these sector-specific requirements while maintaining awareness of cross-sector AI governance principles.

The Double-Edged Sword: AI as Both Solution and Problem

AI's Cybersecurity Promise

Singapore's cybersecurity landscape presents unique challenges given our status as a major financial centre and critical infrastructure hub. AI technologies offer transformative solutions by enabling security teams to analyse threat indicators from millions of endpoints far faster than manual methods allow. AI-powered analytics can enable each analyst to carry out two to three times more threat hunts, significantly improving an organisation's defensive posture.

Large language models (LLMs) offer particular value in threat detection and remediation when they are kept current with updated threat data and security assessments. These systems can help surface emerging cyberattacks before human teams detect them, and can analyse alerts and system logs to suggest remediation strategies.

For Singapore organisations facing a severe cybersecurity talent shortage, AI offers crucial cost reduction benefits. Rather than requiring proportional increases in skilled personnel as data volumes grow, AI augments existing analysts' capabilities, allowing organisations to expand their security capacity without dramatically increasing labour costs—particularly important given Singapore's tight labour market and high salary expectations.

The Emerging Threat Landscape

However, AI's accessibility has fundamentally altered Singapore's threat landscape. Cybercriminals now leverage AI to create increasingly sophisticated social engineering campaigns targeting our multilingual, digitally connected population. The technology enables faster identification of high-value targets among Singapore's concentration of wealthy individuals and multinational corporations while reducing the cost of cyberattack tools.

More concerning are attack vectors specific to AI systems themselves. Data poisoning attacks corrupt the data used to train an AI model in order to manipulate its outputs, while prompt injection attacks use crafted inputs to bypass a model's security guardrails. These novel techniques exploit vulnerabilities inherent in how AI systems learn and process inputs, rather than traditional software flaws.
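To make the data poisoning mechanism concrete, here is a deliberately simplified, hypothetical sketch in Python. It does not depict any real system. A toy nearest-centroid classifier labels transactions using a single made-up risk score, and an attacker slips a handful of mislabelled records into its training data. The function names, data, and labels are illustrative assumptions.

```python
from statistics import mean


def train_centroids(samples):
    """Fit a toy nearest-centroid model: the mean risk score for each label."""
    by_label = {}
    for score, label in samples:
        by_label.setdefault(label, []).append(score)
    return {label: mean(scores) for label, scores in by_label.items()}


def predict(centroids, score):
    """Return the label whose centroid lies closest to the given score."""
    return min(centroids, key=lambda label: abs(centroids[label] - score))


# Hypothetical training records: (risk score, label), where 0 = approve, 1 = flag for review.
clean_data = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]

# Poisoning: an attacker slips high-risk records into the training set labelled "approve".
poisoned_data = clean_data + [(9.0, 0), (9.0, 0), (9.0, 0)]

clean_model = train_centroids(clean_data)        # centroids: {0: 2.0, 1: 8.0}
poisoned_model = train_centroids(poisoned_data)  # centroids: {0: 5.5, 1: 8.0}

suspicious_score = 6.0
print(predict(clean_model, suspicious_score))     # prints 1 (flagged for review)
print(predict(poisoned_model, suspicious_score))  # prints 0 (approved)
```

The model's code is identical in both runs. Only the training data differs, and that is enough to turn a flagged transaction into an approved one. This is why the integrity of training data deserves the same scrutiny as conventional software security.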

Nation-state actors represent a particularly sophisticated threat to Singapore given our geopolitical position. These adversaries actively explore AI tools to enhance their attack capabilities, creating an arms race dynamic where defensive AI implementations must continuously evolve to counter increasingly AI-enhanced offensive capabilities.

Regulatory Reality: Singapore's Proactive Approach

The Accelerating Regulatory Timeline

Unlike many jurisdictions that take a wait-and-see approach to technology regulation, Singapore has adopted a proactive stance on AI governance. This reflects our broader regulatory philosophy of enabling innovation while managing risks, an approach often characterised as technology-neutral but risk-focused.

The IMDA's Model AI Governance Framework provides a risk-based approach that organisations can adapt to their specific contexts. While not legally mandated for most sectors, this framework increasingly represents the expected standard of care for Singapore boards. Directors who ignore these guidelines do so at their organisation's peril, as regulatory expectations solidify around these principles.

MAS has gone further in the financial sector, with clear expectations that boards oversee technology and AI risk. Its Technology Risk Management Guidelines require financial institutions to implement appropriate governance structures, risk assessment processes, and control mechanisms for their technology systems. Its FEAT principles set out specific expectations for the responsible use of AI and data analytics.

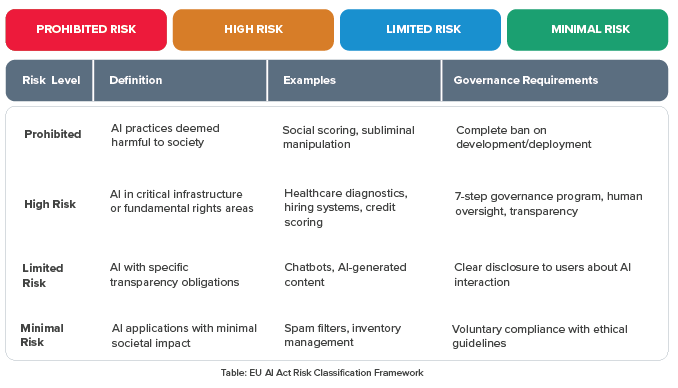

The Model AI Governance Framework for Generative AI adds another layer of governance expectations, particularly around data protection, transparency, and accountability. Singapore boards must navigate these overlapping frameworks while maintaining awareness of international regulations such as the EU AI Act, which may affect organisations with European operations or customers.

Compliance as Competitive Advantage

Singapore's regulatory approach creates opportunities for forward-thinking boards. Organisations that embrace recommended governance practices early position themselves advantageously in several ways:

Trust and Reputation: In Singapore's relationship-driven business environment, demonstrating responsible AI governance builds stakeholder confidence. This matters particularly for organisations serving regional markets from Singapore, where the city-state's regulatory reputation enhances commercial credibility.

Regulatory Readiness: While current AI governance frameworks are largely voluntary, mandatory requirements are inevitable. Early adopters avoid the disruption and cost of retrofitting governance structures when regulations tighten.

Talent Attraction: Singapore competes globally for AI talent. Organisations with robust AI governance frameworks appeal to ethically minded professionals who increasingly consider organisational values when making employment decisions.

Market Access: As international AI regulations emerge, Singapore organisations with strong governance practices will find it easier to access regulated markets, particularly in Europe and other jurisdictions implementing strict AI laws.

Board Readiness: Five Pillars for Singapore Directors

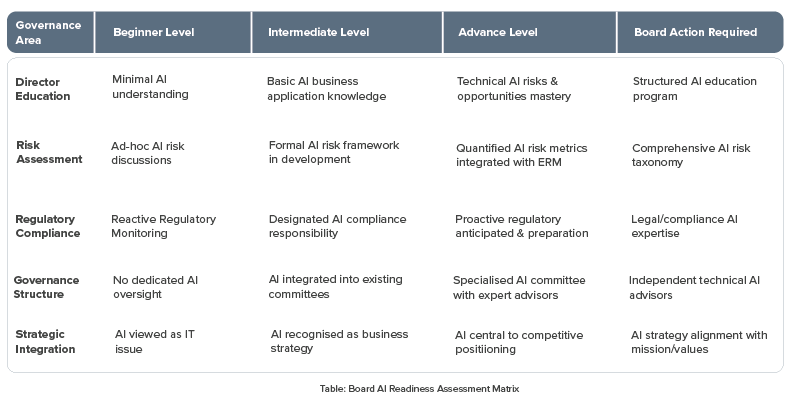

Pillar One: Director Education and Singapore Context

SID emphasises continuous director education as fundamental to effective board service. For AI governance, this principle becomes especially critical. Singapore directors must understand not only AI's technical fundamentals but also our unique regulatory landscape and regional competitive dynamics.

Effective AI oversight requires directors to grasp AI's business applications, inherent risks, and limitations within the Singapore context. The "black box" nature of many AI systems creates particular governance challenges, as traditional oversight assumes transparency and explainability.

Singapore boards should invest in structured AI education programmes. SID offers director development programmes that increasingly incorporate technology governance, while organisations such as the Singapore Computer Society provide technical training that can be adapted for board-level learning. Singapore Management University and the National University of Singapore also offer executive programmes addressing AI governance.

Directors should also engage with Singapore's AI governance ecosystem, including:

- IMDA's Advisory Council on the Ethical Use of AI and Data

- PDPC's data protection and AI guidance

- Industry-specific AI governance working groups

- Regional AI governance forums and conferences

Pillar Two: Strategic Positioning in Singapore's Economy

Singapore directors must assess AI's strategic implications within our unique economic context. As a small, open economy heavily dependent on services exports and regional business, AI adoption affects competitive positioning differently than in larger, more diversified economies.

Key strategic considerations for Singapore boards include:

- Regional Competition: How are competitors in Singapore and across Southeast Asia deploying AI? What risks does maintaining the status quo create when regional rivals move aggressively into AI-driven business models?

- Smart Nation Alignment: How does the organisation's AI strategy align with Singapore's national digitalisation agenda? Are there opportunities to participate in government AI initiatives or public-private partnerships?

- Talent and Innovation: Given Singapore's limited talent pool, how can AI augment human capabilities rather than simply replacing workers? What partnerships with local universities and research institutions could enhance AI capabilities?

- Cross-Border Operations: For organisations operating regionally from Singapore, how do varying AI regulatory regimes across ASEAN affect governance requirements? What standards should the board establish for AI deployment across different jurisdictions?

Pillar Three: Risk Assessment and Singapore-Specific Threats

Modern Singapore boards must simultaneously evaluate AI's opportunities and operational risks within our threat environment. Nation-state actors and cybercriminal groups increasingly target Singapore given our concentration of financial services, logistics hubs, and critical infrastructure.

The provenance of training data becomes critical for Singapore organisations. AI systems trained on data containing personal information without proper authorisation expose organisations to significant liabilities under Singapore's Personal Data Protection Act. The PDPC has emphasised that data protection obligations apply throughout the AI lifecycle, from training data collection through model deployment and ongoing operations.

Singapore boards should ensure comprehensive due diligence for AI suppliers, particularly given our heavy reliance on imported technology. Key considerations include:

- Data Sovereignty: Where is training data stored and processed? Does this create jurisdictional risks or conflicts with Singapore's data protection requirements?

- Supply Chain Security: What assurances exist regarding AI supply chain integrity? Are there dependencies on suppliers from jurisdictions that might create geopolitical risks?

- Vendor Risk Management: As vendors increasingly disclaim AI-related risks in licensing agreements, what contractual protections and governance oversight does the organisation maintain?

- Third-Party Audits: Has the AI system undergone independent security assessments? Do audit rights exist for ongoing verification?

Pillar Four: Regulatory Compliance and Disclosure

Singapore's evolving AI regulatory landscape creates disclosure obligations that boards must anticipate. While many current frameworks remain voluntary, the trajectory toward mandatory requirements is clear.

Directors should ensure their organisations maintain:

- Framework Alignment: Documentation demonstrating alignment with IMDA's Model AI Governance Framework, including how the organisation addresses each principle and why any deviations are justified.

- Sector-Specific Compliance: For regulated industries, evidence of compliance with sector-specific AI governance requirements from MAS, other regulators, or industry bodies.

- Data Protection Integration: Clear processes showing how AI systems comply with the Personal Data Protection Act, including data protection impact assessments for high-risk AI applications.

- Stakeholder Communication: Transparent disclosure to customers, employees, and other stakeholders about AI use, consistent with the PDPC's guidance on AI transparency.

- Incident Response: Protocols for identifying, managing, and disclosing AI-related incidents, aligned with Singapore's cybersecurity incident reporting requirements.

Pillar Five: Governance Structure for Singapore Organisations

Traditional board structures may require adaptation for effective AI oversight in Singapore's context. SID has long emphasised that governance structures should fit organisational needs rather than follow rigid templates, and this principle applies equally to AI governance.

Options for Singapore boards include:

- Full Board Oversight: For organisations where AI represents a strategic imperative, the full board maintains active AI governance oversight, with regular presentations from management on AI initiatives, risks, and performance.

- Technology Committee: Some larger Singapore organisations establish dedicated technology or innovation committees with AI governance as a key responsibility. This allows for deeper technical engagement while reporting to the full board on strategic matters.

- Integration with Existing Committees: Many organisations integrate AI governance into existing structures. Audit committees might oversee AI risk management and controls, while risk committees address AI-specific threats. Nominating committees consider AI expertise in board composition.

- Advisory Boards: Some Singapore organisations establish technical advisory boards composed of AI experts who provide specialised guidance to management and the board without formal directorial responsibilities.

Regardless of structure, Singapore boards should consider:

- Board Composition: Does the board possess sufficient technology expertise for effective AI oversight? Should the organisation recruit directors with AI backgrounds, or can existing directors develop necessary competencies through education?

- Management Accountability: Who in management owns AI governance? Is there clear accountability for AI risk management, ethics, and compliance?

- External Expertise: Should the organisation engage independent AI advisors to supplement board and management capabilities? What qualifications and independence requirements should apply?

- Regional Coordination: For Singapore-headquartered organisations with regional operations, how does AI governance cascade to subsidiaries? What decisions require Singapore board approval versus local management discretion?

Strategic Questions for Singapore's Modern Board

Effective AI governance requires Singapore directors to ask probing questions that reveal both opportunities and risks within our unique context. These questions fall into five categories:

- Strategic Position

- Risk Management

- Regulatory Compliance

- Operational Oversight

- Board Capability

The Path Forward: Principles for Singapore's AI-Ready Boards

Based on SID's governance principles and Singapore's regulatory approach, several themes should guide board AI governance:

Velocity with Precision: Singapore's competitive advantage depends on moving quickly to capture opportunities while maintaining rigorous risk management. This balance requires sophisticated oversight that avoids both reckless speed and paralysing caution—consistent with our national approach of enabling innovation while managing risks.

Human-Centred Approach: Ensure AI systems serve human objectives and maintain appropriate human oversight. This principle resonates particularly in Singapore's context, where government policy explicitly emphasises that AI should augment rather than simply replace human workers.

Transparency and Accountability: Maintain clear communication about AI capabilities, limitations, and risks with all stakeholders. This transparency builds trust essential in Singapore's relationship-driven business environment and aligns with IMDA and PDPC expectations.

Continuous Learning: Recognise that AI governance requires ongoing adaptation as technologies and regulatory frameworks evolve. SID's emphasis on continuous director development applies with particular force to AI governance.

Regional Leadership: As a regional business hub, Singapore organisations have opportunities to demonstrate governance excellence that builds competitive advantage across Southeast Asian markets. Directors should view robust AI governance not as a compliance burden but as a market differentiator.

Regulatory Engagement: Singapore's regulatory approach emphasises co-creation and consultation. Boards should encourage management to engage proactively with regulators, industry bodies, and governance initiatives rather than waiting for mandates.

Conclusion: The Governance Imperative for Singapore

The integration of AI into business operations represents one of the most significant technological transitions in modern corporate history. For Singapore, with our concentration of financial services, logistics operations, and technology businesses, effective AI governance is not optional—it's essential to maintaining our competitive position as a trusted regional hub.

Boards that treat AI governance as a future consideration risk being overwhelmed by regulatory requirements, competitive disadvantages, and unforeseen risks. Conversely, those that invest in AI literacy, governance frameworks, and oversight capabilities position their organisations to capture AI's transformative benefits while managing inherent risks responsibly.

The window for proactive AI governance is narrowing. Singapore's regulators are establishing expectations, competitors are advancing their AI capabilities, and stakeholders increasingly demand transparency about AI use. Boards must act with the velocity and precision that characterise Singapore's approach to innovation, moving quickly to establish robust governance while avoiding the pitfalls of poorly managed AI deployment.

For Singapore directors, the question is not whether to engage with AI governance, but how quickly and effectively they can develop the capabilities necessary to discharge their fiduciary duties in an AI-transformed business landscape. The organisations that answer this question successfully will be those whose boards embrace AI governance as a strategic imperative rather than a technical afterthought.

Singapore's position as a global technology leader depends significantly on our corporate governance reputation. By demonstrating excellence in AI governance, Singapore boards can enhance not only their individual organisations' competitive positions but also our nation's standing as a responsible, innovative business hub. This is the dual opportunity and obligation facing Singapore's modern board: to govern AI effectively while contributing to national competitive advantage.

----------------

References

Singapore-Specific Sources:

- Infocomm Media Development Authority. "Model AI Governance Framework." Second Edition, January 2020.

- AI Verify Foundation and Infocomm Media Development Authority. "Model AI Governance Framework for Generative AI." Singapore, 2024.

- Monetary Authority of Singapore. "Principles to Promote Fairness, Ethics, Accountability and Transparency (FEAT) in the Use of AI and Data Analytics in Singapore's Financial Sector." November 2018.

- Singapore Institute of Directors. "Board Guide on Technology Governance." Singapore: SID, 2023.

- Smart Nation Singapore. "National AI Strategy." Singapore Government, 2019.

- Cyber Security Agency of Singapore. "Singapore Cyber Landscape 2023." Singapore, 2024.

- Personal Data Protection Commission. "Guide to Data Protection Impact Assessments." Singapore, 2021.

- Singapore Institute of Directors. "Code for Directors." Singapore: SID, 2022.

- Monetary Authority of Singapore. "Technology Risk Management Guidelines." Singapore, 2021.

Other Sources:

- National Association of Corporate Directors and Internet Security Alliance. "AI in Cybersecurity: Special Supplement to the NACD-ISA Director's Handbook on Cyber-Risk Oversight." Arlington, VA: NACD, 2025.

- Singla, Alex, Alexander Sukharevsky, Lareina Yee, and Michael Chui. "The state of AI in early 2024: Gen AI adoption spikes and starts to generate value." McKinsey & Company, May 30, 2024.

- European Union. "Artificial Intelligence Act." Official Journal of the European Union, 2024.

- National Institute of Standards and Technology. "AI Risk Management Framework (AI RMF 1.0)." January 2023.

- UK National Cyber Security Centre. "The near-term impact of AI on the cyber threat." January 24, 2024.

- Bowen, Ed, Wendy Frank, Deborah Golden, Michael Morris, and Kieran Norton. "Cyber AI: Real defense." Deloitte Insights, December 7, 2021.