Only 10% of companies have successfully scaled their generative AI implementations, despite growing enthusiasm for AI. McKinsey's research shows how organisations struggle to establish reliable AI Management Systems (AIMS).

Security and privacy concerns top the list of barriers to AI adoption. CIOs report that security threats pose major challenges for 57% of organisations. Policy-related problems hinder progress for 77% of CISOs. The ISO Standard for AI (ISO/IEC42001:2023) now offers a complete framework that enables responsible AI implementation.

The new standard's 39 controls help organisations address their AI deployment challenges effectively. These controls cover everything from policies to impact analysis and third-party relationships. Organisations can build trustworthy AI systems with proper security and privacy measures by combining this framework with ISO 27001 and ISO 27701 standards.

Understanding ISO/IEC 42001:2023 Framework Fundamentals

The ISO/IEC 42001:2023 framework helps organisations manage their Artificial Intelligence systems with a focus on ethical use, transparency, and accountability. This innovative standard, released in December 2023, guides organisations how to build trustworthy AI management systems that operate responsibly during development, deployment, and operation1.

Core Components of the AI Management System (AIMS)

ISO 42001 defines an AI Management System (AIMS) as a set of connected elements that establish policies, objectives, and processes for responsible AI development and use2. The standard uses the Plan-Do-Check-Act method and has several significant components:

How ISO 42001 Is Different from Other AI Standards

Most AI regulations deal with policy and ethics, but ISO 42001 provides businesses a practical framework to align their AI operations with risk management and compliance best practices4. The standard goes beyond basic security measures by adding AI-specific controls and considerations5.

ISO 42001 stands out with its detailed structure of 10 clauses and 4 annexes. The framework includes 38 controls across 10 control objectives3, providing organisations practical guidance to implement responsible AI practices.

While the NIST AI Risk Management Framework takes a broader approach, ISO 42001 zeroes in on organisational AI governance and enables organisations to become certified4. This certification path is a great way to gain formal recognition for following AI governance standards, which builds stakeholder trust5.

Key Terminology and Definitions for Implementation

A good grasp of key terms helps organisations implement the ISO 42001 framework effectively. Requirement 3 creates a standard vocabulary that gives all stakeholders the same understanding of critical terms6.

The term "organisation" means a person or group that has functions, responsibilities, authorities, and relationships to achieve objectives. This broad definition works for organisations of all sizes, from single entrepreneurs to large corporations6.

An "interested party" means any person or organisation that affects, is affected by, or perceives itself as affected by AIMS decisions. This includes customers, employees, suppliers, regulators, and the community6.

The "management system" refers to connected elements that establish policies, objectives, and processes to achieve those objectives. For AI, this means taking a structured approach to develop, provide, or use AI systems responsibly2.

These standard definitions are the foundations of effective AI management. They help stakeholders navigate complexities and ensure ethical, responsible AI development practices.

Assessing Your Organisation's AI Readiness

Organisations must evaluate their readiness before implementing AIMS. Research shows that 95% of organisations haven't implemented any AI governance framework, though 82% identify it a pressing priority7. This review phase identifies risks, key stakeholders, and governance gaps needed for ISO/IEC42001:2023 compliance.

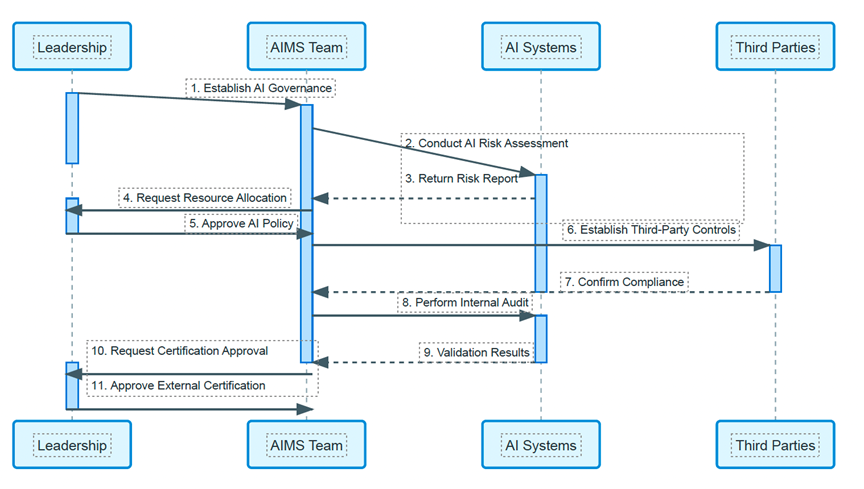

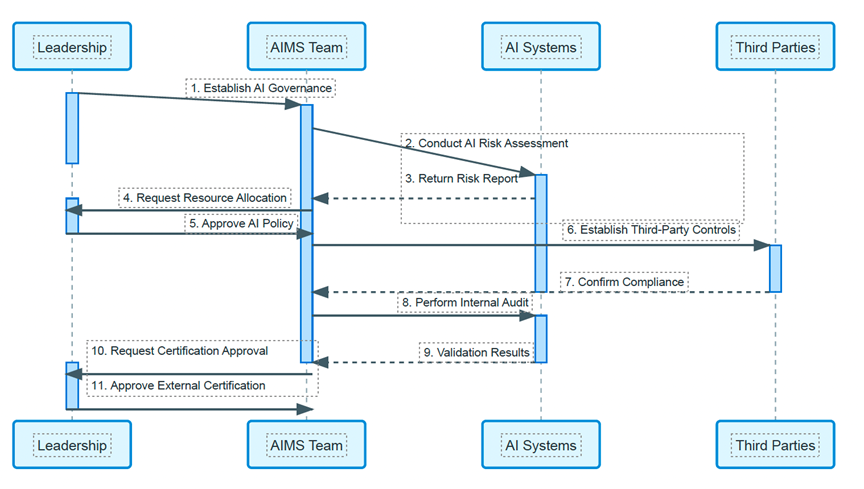

Figure 1: ISO/IEC 42001:2023 Implementation Sequence

Conducting an AI Risk Assessment

A successful AI risk assessment needs systematic identification and review of risks linked to AI implementation. The Plan-Do-Check-Act (PDCA) methodology provides a structured approach to this process8:

AI systems bring unique challenges including algorithmic bias, transparency issues, and accountability concerns10. Data integrity and security stand out as the biggest barriers to new AI solutions. Studies show that 41% of executives face data quality issues and 37% struggle with bias detection and mitigation7.

Identifying Stakeholders and Responsibilities

Stakeholders include anyone affected by, interested in, or having control over an AI system10. The right stakeholder identification ensures all points of view and concerns shape the AI governance process.

Key stakeholder groups typically include:

Gap Analysis: Current vs. Required AI Governance

Organisations preparing for ISO 42001 certification need a gap assessment (or readiness assessment). This review highlights the difference between current practices and the standard's requirements12.

The gap analysis should review:

Note that your organisation's implementation effort for ISO 42001 readiness depends on your existing management processes and controls12. Strong security controls already in place will ease the implementation process.

Building Your ISO 42001 Implementation Roadmap

Once your organisation's assessment is complete, the next vital step toward ISO 42001 compliance is creating a well-laid-out implementation roadmap. A good plan will help you build your AIMS that aligns with your company's goals.

Establishing Leadership Commitment and AI Policy

Leadership's steadfast dedication is the lifeblood of successful ISO/IEC42001:2023 implementation. Top management should actively involve themselves in developing, implementing, and improving the AIMS. This commitment includes:

Creating a Phased Implementation Timeline

Breaking implementation into phases helps you adopt ISO 42001 step by step. Here's how to structure your timeline:

Resource Allocation and Budget Planning

Smart resource allocation is essential for successful ISO 42001 implementation. Your organisation should strategically allocate financial, human, physical, and technological resources across AI projects. Key considerations include:

This implementation roadmap will help your organisation build resilient infrastructure for ISO 42001 compliance and encourage responsible AI development and use.

Implementing Core AIMS Controls and Processes

Your organisation needs core controls across several critical domains to implement ISO 42001 effectively when setting up your AIMS. These controls help you manage AI ethically and effectively throughout its lifecycle.

Data Governance and Quality Management

Trustworthy AI systems depend on effective data governance as their lifeblood. Organisations must document information about data resources employed for AI systems under ISO/IEC42001:202313. This process ensures data quality, security, and regulatory compliance.

A robust data governance plan needs:

AI System Life Cycle Management

AI systems need constant attention throughout their lifecycle. ISO 42001 requires organisations to define and document verification and validation measures with specific usage criteria15. The process covers planning, testing, monitoring, performance optimisation, and final system retirement.

Your lifecycle management should include documented procedures for each phase from development to retirement. The system should automatically record events logs over its lifetime16. These logs must record usage periods, reference databases, and individuals involved in verification processes.

Third-Party AI Provider Management

Most organisations work with external partners to implement AI. ISO 42001 requires processes that ensure services or products from suppliers align with responsible AI development principles17. You need to conduct due diligence, monitor continuously, and regularly evaluate third-party AI providers.

Clear documentation of roles and responsibilities facilitates effective supplier relationship management. Detailed records of supplier evaluations, agreements, and performance assessments ensure accountability and transparency.

Documentation and Record-Keeping Requirements

A complete documentation system forms the foundation of AIMS compliance. Your records must be "legible, easily identifiable, and retrievable" with clear procedures for identification, storage, protection, retrieval, retention, and disposal18.

ISO 42001 mandates documentation of AI system design, development plans, deployment strategies, and technical specifications15. Your organisation should maintain an AI system technical documentation portfolio that is accessible to stakeholders, users, partners, and supervisory authorities.

Version control prevents the use of outdated information and ensures that only the most current versions are accessible to users19.

Table 1: Benefits of ISO42001 implementation

Measuring and Validating ISO 42001 Compliance

Proving your AI Management System is effective requires resilient measurement and compliance checks to ensure AI operates reliably. A good evaluation combines internal reviews with preparation for external certification, creating an ongoing improvement cycle.

Internal Audit Procedures for AIMS

Internal audits act as key practice runs before formal certification and help spot remaining problems in your AI Management System setup. These audits follow a well-laid-out five-phase approach: scoping, planning, assessment, reporting, and remediation. Here's how to run effective internal audits:

Performance Metrics and KPIs for Trustworthy AI

You need reliable, valid and practical measurement tools to monitor AIMS effectiveness and compare different setup strategies. These measurement qualities matter:

Preparing for External Certification

After thorough internal prep work, the next step would be to work with an accredited certification body for formal ISO 42001 verification. The certification process involves these steps:

Conclusion

ISO 42001 provides organisations with a well-laid-out approach to manage artificial intelligence responsibly. This comprehensive framework tackles major challenges businesses face, especially when dealing with security threats and policy issues that often hinder AI adoption.

Organisations that follow ISO 42001 gain the following important benefits:

Regular measurement and verification are the lifeblood of a working AIMS. Organisations can track their progress and identify areas to improve through internal audits and clear performance metrics. External certification provides tangible validation of these efforts and shows your dedication to responsible AI practices.

As AI technology continues to evolve, management systems that comply with ISO 42001 will become crucial for organisations seeking to build trust while maximising the benefits of AI. This standard empowers you to navigate AI challenges with confidence and ensures ethical, responsible implementation.

References

Security and privacy concerns top the list of barriers to AI adoption. CIOs report that security threats pose major challenges for 57% of organisations, and 77% of CISOs say policy-related problems hinder progress. The ISO standard for AI (ISO/IEC 42001:2023) now offers a complete framework for responsible AI implementation.

The new standard's 38 controls help organisations address their AI deployment challenges. These controls cover everything from policies to impact analysis and third-party relationships. By combining this framework with ISO 27001 and ISO 27701, organisations can build trustworthy AI systems with proper security and privacy measures.

Understanding ISO/IEC 42001:2023 Framework Fundamentals

The ISO/IEC 42001:2023 framework helps organisations manage their Artificial Intelligence systems with a focus on ethical use, transparency, and accountability. This standard, published in December 2023, guides organisations on how to build trustworthy AI management systems that operate responsibly during development, deployment, and operation [1].

Core Components of the AI Management System (AIMS)

ISO 42001 defines an AI Management System (AIMS) as a set of connected elements that establish policies, objectives, and processes for responsible AI development and use [2]. The standard uses the Plan-Do-Check-Act method and has several significant components:

- Governance Framework: Sets clear roles, responsibilities, and oversight mechanisms for AI systems that promote accountability across the organisation

- Risk Management: Implements processes to identify, assess, and reduce AI-specific risks through continuous monitoring of the AIMS lifecycle

- AI System Lifecycle Management: Covers all AI development aspects from planning and testing to fixing issues

- Data Governance: Protects the integrity, security, and privacy of AI system data

- Third-Party Management: Applies controls to suppliers to align with the organisation's principles and methods

How ISO 42001 Is Different from Other AI Standards

Most AI regulations deal with policy and ethics, but ISO 42001 gives businesses a practical framework to align their AI operations with risk management and compliance best practices [4]. The standard goes beyond basic security measures by adding AI-specific controls and considerations [5].

ISO 42001 stands out with its detailed structure of 10 clauses and 4 annexes. The framework includes 38 controls across 10 control objectives [3], giving organisations practical guidance for implementing responsible AI practices.

While the NIST AI Risk Management Framework takes a broader approach, ISO 42001 focuses on organisational AI governance and allows organisations to become certified [4]. Certification provides formal recognition that an organisation follows AI governance standards, which builds stakeholder trust [5].

Key Terminology and Definitions for Implementation

A good grasp of key terms helps organisations implement the ISO 42001 framework effectively. Clause 3 (Terms and definitions) creates a standard vocabulary that gives all stakeholders the same understanding of critical terms [6].

The term "organisation" means a person or group that has functions, responsibilities, authorities, and relationships to achieve objectives. This broad definition works for organisations of all sizes, from single entrepreneurs to large corporations [6].

An "interested party" means any person or organisation that affects, is affected by, or perceives itself as affected by AIMS decisions. This includes customers, employees, suppliers, regulators, and the community [6].

A "management system" refers to connected elements that establish policies and objectives, together with the processes to achieve those objectives. For AI, this means taking a structured approach to developing, providing, or using AI systems responsibly [2].

These standard definitions are the foundations of effective AI management. They help stakeholders navigate complexities and ensure ethical, responsible AI development practices.

Assessing Your Organisation's AI Readiness

Organisations must evaluate their readiness before implementing an AIMS. Research shows that 95% of organisations haven't implemented any AI governance framework, though 82% identify it as a pressing priority [7]. This review phase identifies the risks, key stakeholders, and governance gaps that must be addressed for ISO/IEC 42001:2023 compliance.

Figure 1: ISO/IEC 42001:2023 Implementation Sequence

Conducting an AI Risk Assessment

A successful AI risk assessment needs systematic identification and review of risks linked to AI implementation. The Plan-Do-Check-Act (PDCA) methodology provides a structured approach to this process [8]:

- Plan: Identify potential AI risks and develop mitigation strategies

- Do: Implement the selected risk mitigation strategies

- Check: Monitor and evaluate the effectiveness of these strategies

- Act: Make necessary adjustments to improve risk management processes

AI systems bring unique challenges including algorithmic bias, transparency issues, and accountability concerns [10]. Data integrity and security stand out as the biggest barriers to new AI solutions. Studies show that 41% of executives face data quality issues and 37% struggle with bias detection and mitigation [7].
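The Plan and Check steps above can be sketched as a small risk register. This is an illustrative sketch rather than anything prescribed by ISO/IEC 42001: the 1-to-5 likelihood and impact scales, the treatment threshold of 12, and the names `AIRisk` and `risks_needing_treatment` are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class AIRisk:
    """One entry in a hypothetical AI risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    mitigation: str = ""

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring; other schemes are equally valid.
        return self.likelihood * self.impact


def risks_needing_treatment(register: list[AIRisk], threshold: int = 12) -> list[AIRisk]:
    """Return risks at or above the (assumed) threshold, highest score first."""
    flagged = [r for r in register if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Example entries based on the challenges named in the text; scores are made up.
register = [
    AIRisk("Algorithmic bias in outputs", likelihood=4, impact=4),
    AIRisk("Poor training data quality", likelihood=3, impact=4),
    AIRisk("Unclear accountability for decisions", likelihood=2, impact=3),
]

for risk in risks_needing_treatment(register):
    print(f"{risk.score:>2}  {risk.name}")
```

Re-scoring the same register after mitigations are in place gives a simple way to carry out the Check step and decide what to adjust in the Act step.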

Identifying Stakeholders and Responsibilities

Stakeholders include anyone affected by, interested in, or having control over an AI system [10]. Identifying the right stakeholders ensures that all points of view and concerns shape the AI governance process.

Key stakeholder groups typically include:

- Internal stakeholders: Developers, engineers, product managers, compliance teams, and leadership

- External stakeholders: Customers, suppliers, regulators, and broader community members

Gap Analysis: Current vs. Required AI Governance

Organisations preparing for ISO 42001 certification need a gap assessment (or readiness assessment). This review highlights the difference between current practices and the standard's requirements [12].

The gap analysis should review:

- Existing governance structures versus ISO 42001 requirements

- Current risk management practices versus those needed for AIMS

- Documentation and record-keeping systems versus standard requirements

- Staff training and awareness levels versus what's needed for compliance

Note that the effort needed to reach ISO 42001 readiness depends on your existing management processes and controls [12]. Strong security controls already in place will ease the implementation process.
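A gap analysis of this kind can be sketched as a comparison between required and existing practices per area. The area names and practice labels below are hypothetical placeholders, not the wording of the standard, and `gap_report` is a name invented for this example.

```python
# Hypothetical checklist: area -> set of required practices (illustrative only,
# not the wording of ISO/IEC 42001).
required = {
    "governance": {"ai_policy", "roles_defined", "management_review"},
    "risk_management": {"risk_register", "impact_assessment"},
    "documentation": {"record_retention", "version_control"},
    "training": {"awareness_programme"},
}

# What the organisation already has in place (example data).
current = {
    "governance": {"roles_defined"},
    "risk_management": {"risk_register", "impact_assessment"},
    "documentation": {"version_control"},
    "training": set(),
}


def gap_report(required, current):
    """Return {area: (coverage_ratio, sorted_missing_items)}."""
    report = {}
    for area, needed in required.items():
        have = current.get(area, set()) & needed
        missing = sorted(needed - have)
        report[area] = (len(have) / len(needed), missing)
    return report


for area, (coverage, missing) in gap_report(required, current).items():
    print(f"{area}: {coverage:.0%} covered, missing: {missing or 'nothing'}")
```

Areas with low coverage are natural candidates for the early phases of the implementation roadmap.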

Building Your ISO 42001 Implementation Roadmap

Once your organisation's assessment is complete, the next step toward ISO 42001 compliance is creating a clear implementation roadmap. A good plan will help you build an AIMS that aligns with your company's goals.

Establishing Leadership Commitment and AI Policy

Leadership commitment underpins a successful ISO/IEC 42001:2023 implementation. Top management should be actively involved in developing, implementing, and improving the AIMS. This commitment includes:

- Creating a clear AI governance vision that aligns with strategic goals

- Taking part in the implementation process

- Promoting responsible AI across the organisation

- Ensuring the implementation has enough resources

Leadership should also establish an AI policy. An effective AI policy:

- Reflects your organisation's core values and objectives

- Provides a structure for setting AI-related goals

- Commits to regulatory compliance and continuous improvement

- Is documented, shared internally, and accessible to key stakeholders

Defining Scope and Boundaries of Your AIMS

A clear scope helps your AIMS work effectively under ISO 42001:2023. This step makes sure you properly govern your AI models, data sources, and decision-making. When setting your scope:

- List which AI applications and systems you will govern

- Choose which AI lifecycle stages you will cover

- Write down how you will work with outside AI tools and data

- Set your geographical and regulatory limits

Creating a Phased Implementation Timeline

Breaking implementation into phases helps you adopt ISO 42001 step by step. Here's how to structure your timeline:

- Phase 1: Foundation – Gap analysis, team assembly, and leadership approval

- Phase 2: Development – Policy creation, risk assessment, and control implementation

- Phase 3: Implementation – Training programs, documentation, and process integration

- Phase 4: Validation – Internal audits, corrective actions, and certification preparation

Resource Allocation and Budget Planning

Smart resource allocation is essential for successful ISO 42001 implementation. Your organisation should strategically allocate financial, human, physical, and technological resources across AI projects. Key considerations include:

- Strategic planning: Determine what each department and project needs

- Capacity assessment: Evaluate if current resources match future demands

- Risk-based allocation: Focus resources on high-priority tasks first

- Training investment: Allocate money to build AI governance capabilities

This implementation roadmap will help your organisation build resilient infrastructure for ISO 42001 compliance and encourage responsible AI development and use.

Implementing Core AIMS Controls and Processes

When setting up your AIMS, your organisation needs core controls across several critical domains. These controls help you manage AI ethically and effectively throughout its lifecycle.

Data Governance and Quality Management

Trustworthy AI systems depend on effective data governance. Under ISO/IEC 42001:2023, organisations must document information about the data resources used for AI systems [13]. This process supports data quality, security, and regulatory compliance.

A robust data governance plan needs:

- Documentation of data sources, preparation methods, and quality parameters

- Data validation and cleaning processes with regular audits

- Complete records of data origin and changes

- Security measures to protect data integrity and privacy
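The items above can be captured in a structured record per dataset. The following is a minimal sketch under stated assumptions: the `DatasetRecord` fields and the sample data are invented for illustration, and the SHA-256 content hash is one possible way to detect undocumented changes, not a requirement of the standard.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


@dataclass
class DatasetRecord:
    """Hypothetical documentation entry for one data resource."""
    name: str
    source: str
    preparation_steps: list[str]
    quality_checks: dict[str, bool]
    content_sha256: str
    change_log: list[str] = field(default_factory=list)


def fingerprint(data: bytes) -> str:
    # A content hash lets later audits detect undocumented changes.
    return hashlib.sha256(data).hexdigest()


# Made-up sample data standing in for a real dataset file.
raw = b"id,age,outcome\n1,34,approved\n2,51,declined\n"

record = DatasetRecord(
    name="loan-decisions-sample",
    source="internal CRM export (example)",
    preparation_steps=["removed duplicate rows", "dropped free-text fields"],
    quality_checks={"no_missing_ids": True, "age_in_range": True},
    content_sha256=fingerprint(raw),
)
record.change_log.append("initial registration")

print(json.dumps(asdict(record), indent=2))
```

Recomputing the fingerprint during a regular audit and comparing it with the stored value shows whether the data changed without a matching change-log entry.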

AI System Life Cycle Management

AI systems need constant attention throughout their lifecycle. ISO 42001 requires organisations to define and document verification and validation measures with specific usage criteria [15]. The process covers planning, testing, monitoring, performance optimisation, and final system retirement.

Your lifecycle management should include documented procedures for each phase from development to retirement. The system should automatically record event logs over its lifetime [16]. These logs must record usage periods, reference databases, and individuals involved in verification processes.
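One way to picture such a log is as a stream of structured entries, one per use of the system. The sketch below is an assumption-laden illustration: the field names, the identifiers, and the JSON Lines layout are choices made for the example, and an in-memory buffer stands in for what would normally be an append-only file or logging service.

```python
import io
import json
from datetime import datetime, timezone


def make_event(system_id, started, ended, reference_database, verified_by):
    """Build one log entry; the field names are illustrative."""
    if ended < started:
        raise ValueError("usage period ends before it starts")
    return {
        "system_id": system_id,
        "usage_start": started.isoformat(),
        "usage_end": ended.isoformat(),
        "reference_database": reference_database,
        "verified_by": verified_by,
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }


def append_event(stream, event):
    # JSON Lines: one event per line, written once and never edited.
    stream.write(json.dumps(event) + "\n")


# In-memory stand-in for an append-only log destination.
buffer = io.StringIO()

event = make_event(
    system_id="credit-scoring-v2",            # hypothetical system name
    started=datetime(2025, 1, 6, 9, 0, tzinfo=timezone.utc),
    ended=datetime(2025, 1, 6, 9, 5, tzinfo=timezone.utc),
    reference_database="applicants-2024Q4",   # hypothetical database name
    verified_by="reviewer-017",               # hypothetical reviewer ID
)
append_event(buffer, event)
print(buffer.getvalue(), end="")
```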

Third-Party AI Provider Management

Most organisations work with external partners to implement AI. ISO 42001 requires processes that ensure services or products from suppliers align with responsible AI development principles [17]. You need to conduct due diligence, monitor continuously, and regularly evaluate third-party AI providers.

Clear documentation of roles and responsibilities facilitates effective supplier relationship management. Detailed records of supplier evaluations, agreements, and performance assessments ensure accountability and transparency.

Documentation and Record-Keeping Requirements

A complete documentation system forms the foundation of AIMS compliance. Your records must be "legible, easily identifiable, and retrievable" with clear procedures for identification, storage, protection, retrieval, retention, and disposal [18].

ISO 42001 mandates documentation of AI system design, development plans, deployment strategies, and technical specifications [15]. Your organisation should maintain an AI system technical documentation portfolio that is accessible to stakeholders, users, partners, and supervisory authorities.

Version control prevents the use of outdated information and ensures that only the most current versions are accessible to users [19].
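The version control rule can be sketched as a register that only ever serves the latest approved version of a document. This is a simplified illustration; the `DocumentRegister` class, the document ID format, and the approval flag are assumptions, and a real document management system would add access control and retention handling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DocumentVersion:
    doc_id: str
    version: int
    title: str
    approved: bool


class DocumentRegister:
    """Hypothetical register that only serves the latest approved version."""

    def __init__(self):
        self._versions = {}

    def add(self, doc):
        self._versions.setdefault(doc.doc_id, []).append(doc)

    def current(self, doc_id):
        # Drafts and superseded versions are never returned to users.
        approved = [d for d in self._versions.get(doc_id, []) if d.approved]
        if not approved:
            raise LookupError(f"no approved version of {doc_id}")
        return max(approved, key=lambda d: d.version)


docs = DocumentRegister()
docs.add(DocumentVersion("AI-POL-001", 1, "AI Policy", approved=True))
docs.add(DocumentVersion("AI-POL-001", 2, "AI Policy", approved=True))
docs.add(DocumentVersion("AI-POL-001", 3, "AI Policy (draft)", approved=False))

print(docs.current("AI-POL-001").version)  # prints 2
```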

Table 1: Benefits of ISO 42001 implementation

| Benefit | Description | Business Impact |
| --- | --- | --- |
| Clear Governance Structure | Established framework for AI development and deployment | Improved decision-making and accountability |
| Standardised Risk Management | Systematic procedures for risk assessment and mitigation | Reduced liability and improved security |
| Data Quality Management | Robust processes ensuring data integrity | More reliable AI outputs and decisions |
| Third-Party Oversight | Systematic monitoring of external AI providers | Reduced supply chain risks |
| Comprehensive Documentation | Complete record-keeping requirements | Enhanced transparency and auditability |

Measuring and Validating ISO 42001 Compliance

Proving that your AI Management System is effective requires robust measurement and compliance checks. A good evaluation combines internal reviews with preparation for external certification, creating an ongoing improvement cycle.

Internal Audit Procedures for AIMS

Internal audits act as key practice runs before formal certification and help spot remaining problems in your AI Management System setup. These audits follow a well-laid-out five-phase approach: scoping, planning, assessment, reporting, and remediation. Here's how to run effective internal audits:

- Create a complete audit program with clear timelines and roles

- Select neutral auditors who can provide an unbiased view of your AIMS

- Maintain records of all management review meetings and decisions for audit purposes

- Use standard forms to track non-conformities and corrective actions
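A standard form for non-conformities can be as simple as a fixed set of fields plus a rule for spotting overdue corrective actions. The sketch below is hypothetical throughout: the field names, reference numbers, clause references, and dates are example values, not findings or requirements taken from the standard.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class NonConformity:
    """Hypothetical tracking form for one audit finding."""
    ref: str
    clause: str              # clause the finding relates to (example values)
    description: str
    corrective_action: str
    due: date
    closed_on: Optional[date] = None

    def is_overdue(self, today: date) -> bool:
        # Open findings past their due date need escalation.
        return self.closed_on is None and today > self.due


findings = [
    NonConformity("NC-01", "7.5", "Technical documentation missing for chatbot",
                  "Publish documentation", due=date(2025, 3, 1)),
    NonConformity("NC-02", "9.2", "Audit programme lacks a schedule",
                  "Approve annual schedule", due=date(2025, 2, 1),
                  closed_on=date(2025, 1, 20)),
]

today = date(2025, 3, 15)
overdue = [f.ref for f in findings if f.is_overdue(today)]
print(overdue)  # prints ['NC-01']
```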

Performance Metrics and KPIs for Trustworthy AI

You need reliable, valid and practical measurement tools to monitor AIMS effectiveness and compare different setup strategies. These measurement qualities matter:

- Substantive validity: How well metrics reflect the AI constructs they claim to measure

- Reliability: How consistent measurement items are across evaluation periods

- Known-groups validity: How sensitive they are to planned differences between control groups

- Test-retest reliability: How stable measurements remain over time

- Sensitivity to change: How well they detect when things get better or worse
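Test-retest reliability, for example, is commonly estimated as the correlation between two rounds of the same measurement. The sketch below assumes a Pearson correlation is an acceptable estimate and uses made-up KPI values; the `pearson` helper is written out by hand so the example has no dependencies.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / sqrt(var_x * var_y)


# Made-up data: the same KPI measured for five AI systems
# in two consecutive review periods.
period_1 = [0.82, 0.75, 0.91, 0.68, 0.88]
period_2 = [0.80, 0.77, 0.90, 0.70, 0.85]

print(f"test-retest correlation: {pearson(period_1, period_2):.2f}")
```

A value close to 1 suggests the metric is stable between periods; what counts as acceptable is a judgment call for each organisation.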

Preparing for External Certification

After thorough internal preparation, the next step is to work with an accredited certification body for formal ISO 42001 certification. The certification process involves these steps:

- Choose a trusted certification body with the right expertise

- Hold pre-certification meetings to define scope and process

- Keep all AIMS documentation current and available

- Appoint a team member to be the main contact

Conclusion

ISO 42001 provides organisations with a well-laid-out approach to manage artificial intelligence responsibly. This comprehensive framework tackles major challenges businesses face, especially when dealing with security threats and policy issues that often hinder AI adoption.

Organisations that follow ISO 42001 gain the following important benefits:

- Clear governance structures for AI development and deployment

- Standard risk assessment and mitigation procedures

- Resilient data quality management processes

- Systematic oversight of third-party providers

- Complete documentation requirements

Regular measurement and verification are essential to a working AIMS. Organisations can track their progress and identify areas to improve through internal audits and clear performance metrics. External certification provides tangible validation of these efforts and shows your dedication to responsible AI practices.

As AI technology continues to evolve, management systems that comply with ISO 42001 will become crucial for organisations seeking to build trust while maximising the benefits of AI. This standard empowers you to navigate AI challenges with confidence and ensures ethical, responsible implementation.

References

1. https://www.bdo.com/insights/assurance/iso-42001-helping-to-build-trust-in-ai

2. https://www.iso.org/standard/81230.html

3. https://pecb.com/article/a-comprehensive-guide-to-understanding-the-role-of-isoiec-42001

4. https://www.linkedin.com/pulse/iso-42001-vs-other-ai-standards-whats-oyyhc

5. https://www.isms.online/iso-42001/vs-iso-27001/

6. https://www.isms.online/iso-42001/requirement-3-terms-definitions/

7. https://www.artificialintelligence-news.com/news/ai-governance-gap-95-of-firms-havent-frameworks/

8. https://www.isms.online/iso-42001/ai-management-system-aims/

9. https://gdprlocal.com/artificial-intelligence-risk-management-a-practical-step-by-step-guide/

10. https://www.metaverse.law/2025/01/30/what-is-an-ai-risk-assessment-and-how-is-one-conducted/

11. https://www.osler.com/en/insights/updates/the-role-of-iso-iec-42001-in-ai-governance/

12. https://www.schellman.com/blog/iso-certifications/should-you-get-an-iso-42001-gap-assessment

13. https://www.isms.online/iso-42001/

14. https://www.isms.online/iso-42001/annex-a-controls/a-7-data-for-ai-systems/

15. https://www.siscertifications.com/wp-content/uploads/2024/05/List-of-documents-required-for-ISO-42001-documentation.pdf

16. https://artificialintelligenceact.com/article-12-record-keeping/

17. https://www.isms.online/iso-42001/annex-a-controls/a-10-third-party-and-customer-relationships/

18. https://www.icao.int/SAM/Documents/AISAIM2011/SAMAIM4_item3_wp04.pdf

19. https://www.ideagen.com/thought-leadership/blog/implementing-a-quality-management-system-best-practice