Artificial intelligence has become deeply embedded in the operations of modern financial institutions. [1] From fraud detection and risk scoring to customer engagement and portfolio optimisation, AI now influences both strategic decision-making and everyday operations across the sector. [2] [3] Yet the growing sophistication of AI technologies, especially the rise of generative AI and autonomous AI agents, has also introduced an entirely new class of risks. [4] [5] These range from unpredictable model behaviour, hallucinated or inaccurate outputs, and hidden bias, to data leakage, intellectual property concerns, and novel cybersecurity vulnerabilities. In response to these developments, the Monetary Authority of Singapore (MAS) has released its proposed Guidelines on Artificial Intelligence Risk Management (AIRG), which set comprehensive supervisory expectations for how financial institutions should govern, deploy, and oversee AI.

The AIRG builds upon Singapore’s existing regulatory architecture, including the FEAT principles and the Model AI Governance Framework, and operates alongside other MAS guidance, such as technology risk management guidelines and information papers on AI threats. [6] It also aligns with Singapore’s broader data protection landscape, particularly the principles embodied in the Personal Data Protection Act (PDPA). [7] Though the AIRG is not a data protection guideline in its own right, it reinforces many of the same values (transparency, accountability, accuracy, and responsible data use) that underpin the PDPA.

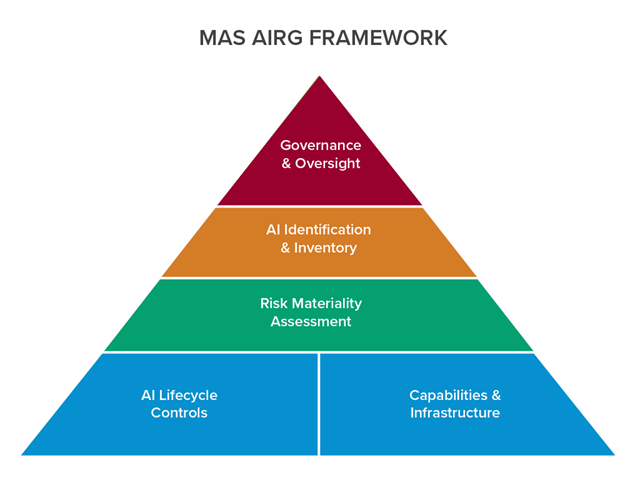

At the centre of the AIRG is a recognition that AI systems are no longer experimental or peripheral tools. They have become integral to financial institutions’ business processes and, in some cases, central to decision-making that directly affects customers’ financial well-being. MAS therefore places significant responsibility on the Board and senior management to establish the institutional conditions necessary for safe and responsible AI use. [8] [9] This includes defining the organisation’s AI risk appetite, embedding AI considerations within enterprise-wide risk management frameworks, ensuring proper coordination across all relevant functions, and fostering a culture that treats AI with the same level of seriousness as other material risks. Governance, under the AIRG, is not an isolated technical function but an organisational mandate.

One of the most important conceptual shifts introduced by the AIRG is the emphasis on identifying AI usage throughout the institution. MAS notes that financial institutions cannot meaningfully govern AI if they cannot reliably detect where it is being used. [10] This is particularly relevant today, as generative AI tools become readily available to employees, sometimes without formal approval or oversight. MAS therefore expects institutions to develop clear definitions and criteria for what constitutes AI, supported by mechanisms to ensure consistent identification. This expectation extends beyond data science teams to include business units, operational teams, and even customer-facing functions. The outcome is a centralised AI inventory that serves as a record of all AI models, systems, and use cases. This inventory forms the backbone of subsequent risk assessments and governance processes, ensuring institutions have a clear view of their AI landscape. [11]
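As a rough illustration of what a centralised AI inventory could look like in practice, the sketch below models one inventory record and a small registry around it. Every name here (`AIInventoryEntry`, `AIInventory`, the individual fields) is hypothetical and chosen for illustration; the AIRG does not prescribe a schema, and a real inventory would likely carry many more attributes.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List


@dataclass
class AIInventoryEntry:
    """One record in a hypothetical centralised AI inventory."""
    system_id: str
    name: str
    owner_unit: str               # business unit accountable for the system
    use_case: str
    is_third_party: bool = False  # vendor, cloud-hosted, or open-source component
    approved: bool = False        # whether the use has been formally approved
    registered_on: date = field(default_factory=date.today)


class AIInventory:
    """Minimal in-memory registry keyed by system_id (illustrative only)."""

    def __init__(self) -> None:
        self._entries: Dict[str, AIInventoryEntry] = {}

    def register(self, entry: AIInventoryEntry) -> None:
        # Reject duplicates so each system or use case is recorded once.
        if entry.system_id in self._entries:
            raise ValueError(f"{entry.system_id} is already registered")
        self._entries[entry.system_id] = entry

    def unapproved(self) -> List[AIInventoryEntry]:
        # Surfaces AI usage that has not gone through formal approval.
        return [e for e in self._entries.values() if not e.approved]

    def by_owner(self, owner_unit: str) -> List[AIInventoryEntry]:
        return [e for e in self._entries.values() if e.owner_unit == owner_unit]


inventory = AIInventory()
inventory.register(AIInventoryEntry("ai-001", "Fraud scorer", "Risk",
                                    "fraud detection", approved=True))
inventory.register(AIInventoryEntry("ai-002", "Chat assistant", "Retail",
                                    "customer engagement", is_third_party=True))
pending = [e.system_id for e in inventory.unapproved()]
```

Keeping the approval flag on the record itself is one simple way to make unsanctioned usage visible in the same place as approved systems, rather than tracking it separately.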

Building on this foundation, MAS requires institutions to conduct risk materiality assessments for each AI system or use case. The assessment considers three dimensions: the impact of the AI system on customers, business operations, and the institution’s risk profile; the complexity of the technology, including explainability and data dependencies; and the level of reliance placed on the AI’s outputs, particularly where decisions are automated or made at scale. Unlike traditional model governance, which often examines statistical models with relatively predictable behaviour, AI systems may evolve, adapt, or drift over time. They also interact with environments and datasets that were not present during their training. MAS’s approach, therefore, seeks to capture the dynamic nature of AI risks by ensuring that institutions understand not only what their AI systems do, but also how they behave under different conditions and the level of autonomy they are granted.
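To make the assessment dimensions concrete, here is a minimal sketch of how an institution might turn ratings for impact, complexity, and reliance into a materiality tier. The 1-to-3 rating scale, the cut-offs, and the rule that a high impact rating alone forces the top tier are all assumptions made for illustration; the AIRG does not specify a scoring formula, and each institution would calibrate its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MaterialityInputs:
    """Hypothetical ratings, each from 1 (low) to 3 (high)."""
    impact: int      # effect on customers, operations, and risk profile
    complexity: int  # explainability and data dependencies
    reliance: int    # degree of automation and scale of use


def materiality_tier(inputs: MaterialityInputs) -> str:
    """Map the three ratings to 'low', 'medium', or 'high' (illustrative rule)."""
    scores = (inputs.impact, inputs.complexity, inputs.reliance)
    if any(s not in (1, 2, 3) for s in scores):
        raise ValueError("each rating must be 1, 2, or 3")
    total = sum(scores)
    # Assumption: a high-impact system is always treated as high materiality,
    # regardless of how simple or lightly relied upon it is.
    if inputs.impact == 3 or total >= 8:
        return "high"
    if total >= 5:
        return "medium"
    return "low"


# An internal summarisation aid versus an automated credit decision engine.
assistive_tool = materiality_tier(MaterialityInputs(impact=1, complexity=2, reliance=1))
credit_engine = materiality_tier(MaterialityInputs(impact=3, complexity=2, reliance=3))
```

The resulting tier can then drive how much oversight a system receives, with lighter controls for lower tiers and more rigorous review for higher ones.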

Figure 1: MAS AI Risk Management Framework

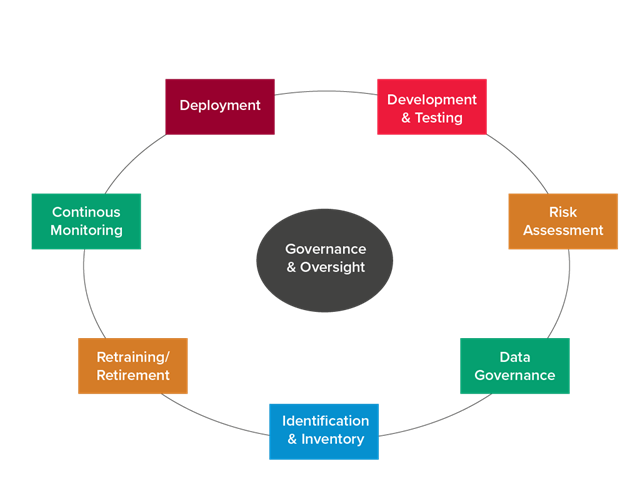

The AIRG also dedicates significant attention to the AI lifecycle, recognising that risks do not end once a model is deployed. Instead, they emerge, evolve, and sometimes intensify over time. Data governance plays a central role here. MAS highlights the importance of using representative, high-quality, and fit-for-purpose data; implementing strict data security and privacy controls; maintaining accurate data lineage; and ensuring that data used for training or inference is handled responsibly. These expectations naturally intersect with the PDPA, which requires organisations to ensure the accuracy, protection, and appropriate use of personal data. Although the PDPA was not originally written with modern AI systems in mind, its principles remain applicable, and MAS’s focus on robust data governance strengthens institutions’ ability to meet the requirements of both regimes simultaneously.

Transparency and explainability form another key theme of the AIRG. MAS recognises that AI systems, especially those based on deep learning or generative models, may produce outputs that are difficult for humans to understand or justify. Yet explainability is vital for accountability, both within institutions and toward customers. The AIRG, therefore, expects institutions to determine the appropriate level of explainability based on the use case and its potential impact. Models used in areas such as credit underwriting, financial advisory, and insurance must be explainable enough to allow for meaningful challenge and customer recourse. This expectation aligns with the PDPA’s growing emphasis on transparency in automated decision-making, creating a cohesive framework that promotes clarity and accountability across all stages of AI deployment.

Figure 2: AI System Lifecycle with Continuous Feedback. All stages provide feedback to governance and each other.

A further area of alignment between the AIRG and the PDPA lies in the handling of third-party AI systems. [12] Many financial institutions rely on external AI vendors, cloud-based LLMs, open-source models, or third-party APIs. MAS stresses that institutions remain fully accountable for the risks arising from these external relationships. This includes assessing vendor transparency, understanding supply chain dependencies, and managing the risks of concentration or over-reliance on a small number of dominant providers. The PDPA similarly emphasises accountability even when external partners process personal data. In practice, the AIRG reinforces this requirement by encouraging institutions to demand robust disclosures, negotiate appropriate contractual safeguards, and ensure that third-party AI systems meet the same risk-management standards as internal systems.

Figure 3: Singapore’s AI Regulatory Ecosystem

Key relationships - Red: Primary Guidelines | Green: Supporting Frameworks | Teal: Foundational Principles

Beyond deployment, MAS requires institutions to maintain continuous monitoring of AI systems, including detecting data drift, model drift, unusual behaviour, or deterioration in performance. This reflects a recognition that AI does not remain static. Its accuracy and fairness may degrade over time, especially as customer behaviour, market conditions, or external environments change. Monitoring is therefore essential to prevent unintended consequences, inaccurate outputs, or discriminatory patterns from emerging unnoticed. Institutions must be prepared to intervene when necessary, including retraining models and adjusting thresholds.
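One common way to check for data drift is the Population Stability Index (PSI), which compares how a feature is distributed in a baseline sample against a recent one. The sketch below is a plain-Python version under simplifying assumptions: equal-width bins derived from the baseline range, out-of-range values clipped into the end bins, and a small floor on empty bins. The function names and the `threshold` parameter are hypothetical, and the AIRG does not mandate PSI or any particular metric.

```python
import math
from typing import List, Sequence


def _bin_proportions(values: Sequence[float], lo: float, hi: float,
                     bins: int, eps: float = 1e-6) -> List[float]:
    """Share of values per equal-width bin, floored at eps to avoid log(0)."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for v in values:
        idx = int((v - lo) / width) if width > 0 else 0
        idx = min(max(idx, 0), bins - 1)  # clip values outside the baseline range
        counts[idx] += 1
    n = len(values)
    return [max(c / n, eps) for c in counts]


def population_stability_index(baseline: Sequence[float],
                               current: Sequence[float],
                               bins: int = 10) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    expected = _bin_proportions(baseline, lo, hi, bins)
    actual = _bin_proportions(current, lo, hi, bins)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def needs_review(psi: float, threshold: float) -> bool:
    # The threshold is institution-specific; no value is implied by the AIRG.
    return psi > threshold


baseline_scores = [i / 100 for i in range(100)]       # spread across 0.00 to 0.99
shifted_scores = [0.5 + i / 200 for i in range(100)]  # concentrated in upper half
psi_same = population_stability_index(baseline_scores, baseline_scores)
psi_shifted = population_stability_index(shifted_scores and baseline_scores, shifted_scores)
```

A check like this covers only input drift for a single numeric feature. Performance deterioration and fairness degradation would need their own measures, tracked alongside it.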

Although the AIRG introduces many new expectations, its overarching goal is not to limit innovation. Instead, MAS seeks to foster responsible, safe, and trustworthy AI adoption by embedding safeguards that match the sophistication of modern AI systems. The Guidelines’ proportionate nature ensures that institutions retain flexibility. AI systems that are low-risk, assistive, or peripheral may be governed with lighter controls, while high-risk, mission-critical systems receive more rigorous oversight. This balanced approach supports innovation while maintaining financial stability and consumer protection.

From a broader perspective, the AIRG represents an evolution in Singapore’s regulatory philosophy. Rather than addressing AI risks through narrow, prescriptive rules, MAS is shaping an ecosystem of trust, accountability, and resilience. Its integration with the PDPA underscores the interconnectedness of AI governance and data protection.

With ongoing advances in AI, the lines separating data, algorithms, and decision-making processes will become increasingly blurred. Regulatory expectations will continue to evolve in response. The AIRG is therefore an important milestone in Singapore’s broader journey toward becoming a leader in the safe and responsible deployment of AI. For financial institutions, this means AI is no longer simply a technological tool. It is a strategic capability accompanied by new responsibilities, and effective governance is essential to ensuring that its benefits are realised without compromising trust, fairness, or security.

References:

[1] MAS AIRG Consultation Paper - https://www.mas.gov.sg/publications/consultations/2025/consultation-paper-on-guidelines-on-artificial-intelligence-risk-management

[2] Kurshan et al. (2020) Towards Self-Regulating AI: Challenges and Opportunities of AI Model Governance in Financial Services - https://arxiv.org/abs/2010.04827

[3] Zhang & Zhou (2019) Fairness Assessment for Artificial Intelligence in Financial Industry - https://arxiv.org/abs/1912.07211

[4] MAS Guidelines for Artificial Intelligence (AI) Risk Management - https://www.mas.gov.sg/news/media-releases/2025/mas-guidelines-for-artificial-intelligence-risk-management

[5] Mirishli (2025) Regulating AI in Financial Services: Legal Frameworks and Compliance Challenges - https://arxiv.org/abs/2503.14541

[6] MAS AIRG Consultation Paper - https://www.mas.gov.sg/publications/consultations/2025/consultation-paper-on-guidelines-on-artificial-intelligence-risk-management

[7] PDPC Model AI Governance Framework - https://www.pdpc.gov.sg/help-and-resources/2020/01/model-ai-governance-framework

[8] MAS AIRG Consultation Paper - https://www.mas.gov.sg/publications/consultations/2025/consultation-paper-on-guidelines-on-artificial-intelligence-risk-management

[9] MLex - https://www.mlex.com/mlex/artificial-intelligence/articles/2410506

[10] GRC Report - https://www.grcreport.com/post/singapore-sets-out-new-guidelines-to-strengthen-ai-risk-management-in-financial-sector

[11] MAS AIRG Consultation Paper - https://www.mas.gov.sg/publications/consultations/2025/consultation-paper-on-guidelines-on-artificial-intelligence-risk-management

[12] PDPC Model AI Governance Framework - https://www.pdpc.gov.sg/help-and-resources/2020/01/model-ai-governance-framework