According to the National Artificial Intelligence Strategy[1] published by Smart Nation Singapore, AI represents the next frontier of technological opportunity. Often, the bottleneck to reaping the full benefits of AI is not the readiness of the technology but our ability to redesign processes, systems, and regulations to deploy it efficiently and responsibly.

Generative artificial intelligence, commonly known as Generative AI, is the talk of the town in today’s rapidly changing business and technology landscape. It refers to the use of artificial intelligence to create new content such as text, images, music, audio, and video.

According to Google Cloud AI[2], Generative AI can process vast amounts of content and generate insights and answers in the form of text, images, and other user-friendly formats. Common Generative AI applications include:

• Improving customer interactions through enhanced chat and search experiences.

• Exploring vast amounts of unstructured data through conversational interfaces and summarisations.

• Assisting with repetitive tasks like replying to requests for proposals (RFPs), localising marketing content in five languages, and checking customer contracts for compliance, among others.

According to a Trend Micro report on exploiting AI[3], AI and machine learning (a subset of AI) can support businesses, critical infrastructure, and industries, as well as help solve some of society’s biggest challenges (including the Covid-19 pandemic). However, these technologies can also enable a wide range of digital, physical, and political threats that lead to harm and destruction. Because enterprises and individual users are vulnerable to scams by malicious actors who misuse and abuse AI, the risks of AI systems being misused and abused need to be identified, understood, and managed.

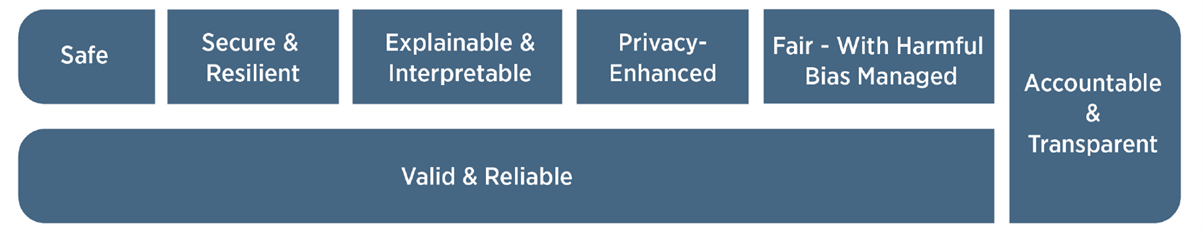

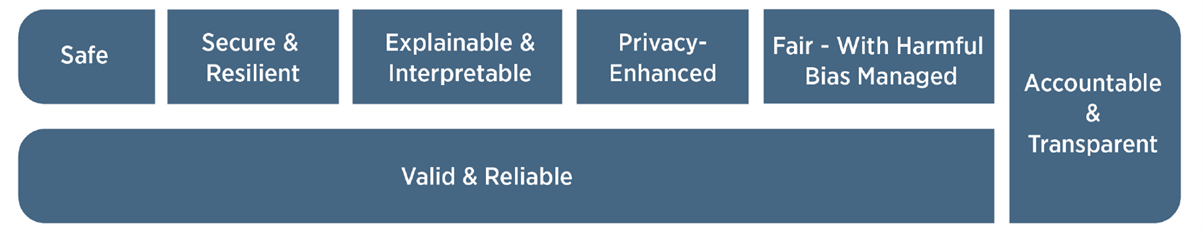

Responsible AI for Enterprise

From an enterprise perspective, Responsible AI is the practice of designing, developing, and deploying AI with the intention of empowering employees and businesses while fairly impacting customers and society. This approach allows enterprises to engender trust and scale AI with confidence. One example of Responsible AI is Getty Images, a global leader in visual content, which has implemented stringent safeguards in its new Generative AI-powered image generation tool[4] to prevent the generation of “problematic content”, demonstrating its commitment to responsible and ethical AI practices.

AI Risk Management Framework

The AI Risk Management Framework[5], developed by the US National Institute of Standards and Technology (NIST) as directed by the National Artificial Intelligence Initiative Act of 2020 (P.L. 116-283), aims to help enterprises that design, develop, deploy, or use AI systems to manage the numerous risks associated with AI and to promote the trustworthy and responsible development and use of AI systems. The Framework is intended to be voluntary, rights-preserving, non-sector-specific, and use-case agnostic, giving organisations of all sizes, in all sectors and throughout society, the flexibility to implement the approaches it outlines.

Building trust by adopting responsible AI practices, with reference to the AI Risk Management Framework principles outlined below, is important because it helps ensure that AI is used ethically and responsibly. Trustworthy AI is rooted in our understanding of how it works and in its ability to deliver valuable insights and knowledge. To trust a decision made by an AI algorithm, we need to know that it is fair, accurate, ethical, and explainable.

Using the Getty Images example to illustrate some of these key principles, we note that Getty Images has implemented its AI-powered image generation tool with due consideration of:

• Explainability: The AI system should be transparent and explainable so that users can understand how decisions are made.

• Fairness: The AI system should be designed to avoid bias and ensure that all users are treated fairly.

• Robustness: The AI system should be designed to be resilient and secure against cyberattacks and other threats.

• Transparency: The AI system should be transparent about its data sources, algorithms, and decision-making processes, and it should be accountable.

• Privacy: The AI system should be designed to protect user privacy and ensure that user data is not misused or mishandled.

Mistrust in AI can lead to underutilisation or disuse of AI systems, while excessive trust in limited or untested systems can result in overreliance on AI. Building trust in AI and implementing Responsible AI practices therefore requires a strategic approach that includes ensuring the quality of the data used, mitigating algorithmic bias, and providing answers that are supported by evidence.

BDO can assist an enterprise in creating an AI Risk Management Framework and offer guidance on developing responsible AI by providing a set of principles and guidelines that are safe for the enterprise to follow. This also helps ensure that the enterprise's AI implementation is used for the benefit of society rather than to cause harm. In turn, it helps build public confidence in the use of AI and encourages the adoption of the enterprise's AI-powered services.

References:

1. National Artificial Intelligence Strategy - https://file.go.gov.sg/nais2019.pdf

2. Generate text, images, code, and more with Google Cloud AI - https://cloud.google.com/use-cases/generative-ai#generate-text-images-code-and-more-with-google-cloud-ai

3. How Cybercriminals Misuse and Abuse AI and ML - https://www.trendmicro.com/vinfo/sg/security/news/cybercrime-and-digital-threats/exploiting-ai-how-cybercriminals-misuse-abuse-ai-and-ml

4. Getty Images Launches Commercially Safe Generative AI Offering - https://newsroom.gettyimages.com/en/getty-images/getty-images-launches-commercially-safe-generative-ai-offering

5. Artificial Intelligence Risk Management Framework - https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf